The satnav effect: Is AI stopping me from learning?

Will the parts of my brain that learn from doing atrophy as I delegate the brainwork to ChatGPT?

“To lose yourself: a voluptuous surrender, lost in your arms, lost to the world, utterly immersed in what is present so that its surroundings fade away. ... to be lost is to be fully present, and to be fully present is to be capable of being in uncertainty and mystery.”

— Rebecca Solnit, A Field Guide to Getting Lost

I

A couple of years ago I started trying to drive without using a satnav (or more precisely, Google Maps on my phone).

I’ve always been suspicious of satnavs. All they do is bark out orders. It seems harmless enough, but I suspect that somewhere in my subconscious it’s reinforcing the narrative: ‘better do what this machine tells me to’. I can’t help but feel it’s a step towards the subjugation of humans by machines. Perhaps people felt this way when traffic lights were introduced. Grumble grumble.

But my issue with the satnav was more practical. I noticed I wasn’t learning my way around when driving in the same way I did when walking or cycling.

I remember one day driving with Adriana from central Edinburgh back home to Glasgow using only street signs. An hour later, when we should’ve been arriving home, we turned a corner to be confronted by the sea: the North Sea! That lies between Edinburgh and Denmark, not between Edinburgh and Glasgow. But we learnt a lot more about the geography of Edinburgh as a result.

Another time, I added an hour to a trip to London by taking the M77 towards the west coast instead of the M74 south.

Both trips I’d made many times with the satnav. While the satnav held my hand, I was apparently learning very little. It’s basic pedagogy that I’ll learn better if I have a go at a problem myself before hearing the solution. But it shocks me quite how little I absorb from the satnav’s steady stream of solutions. I won’t even remember the exact route it’s led me down.

This concerns me not just because of the dependency it breeds, but because navigating space is much more than a means to get around. It’s a fundamental metaphor for how we think. It unites the discreteness of places with the continuity of space. Map-reading confronts me with the gulf between the reality I inhabit and a model that attempts to describe it. If I no longer exercise my mind’s ability to navigate the land, does this undermine how I think more generally?

II

Large Language Models like ChatGPT are still ascending the hype curve. In the coding world, it’s felt like there’s been talk of little else for the past six months. This might be natural given our profession. But I think we’re also experiencing more immediately how AI is changing the nature of computer work.

Whereas an AI tool can now displace an entire job for a photographer or illustrator, I’m unaware of this happening so far with a serious coding project. And whereas, say, a written article requires the whole text to remain coherent to an author’s voice, software development has long since evolved into a modular affair well suited for collaboration. When I ask ChatGPT (and its cousin Copilot) for a small snippet of code, I can just copy-paste it into my project like a Lego brick.

ChatGPT does not know my context. It doesn’t know what I’m trying to achieve here. I have to think of what’s relevant and tell it. And its code is not always correct. I have to verify and test the answers it provides. But it’s more than good enough to dramatically increase the pace at which I can make things.

What is this doing to my mind? I’m getting more done, but am I getting stupider? If OpenAI kicks me off ChatGPT, would it just leave me slower? Or will I be left in the middle of nowhere, clueless about how to continue this work myself?

III

To illustrate this, I’ll share a brief snapshot of my experience coding with ChatGPT. Don’t worry if the technical details feel overwhelming or go over your head. That experience is actually part of what I’m trying to illustrate here.

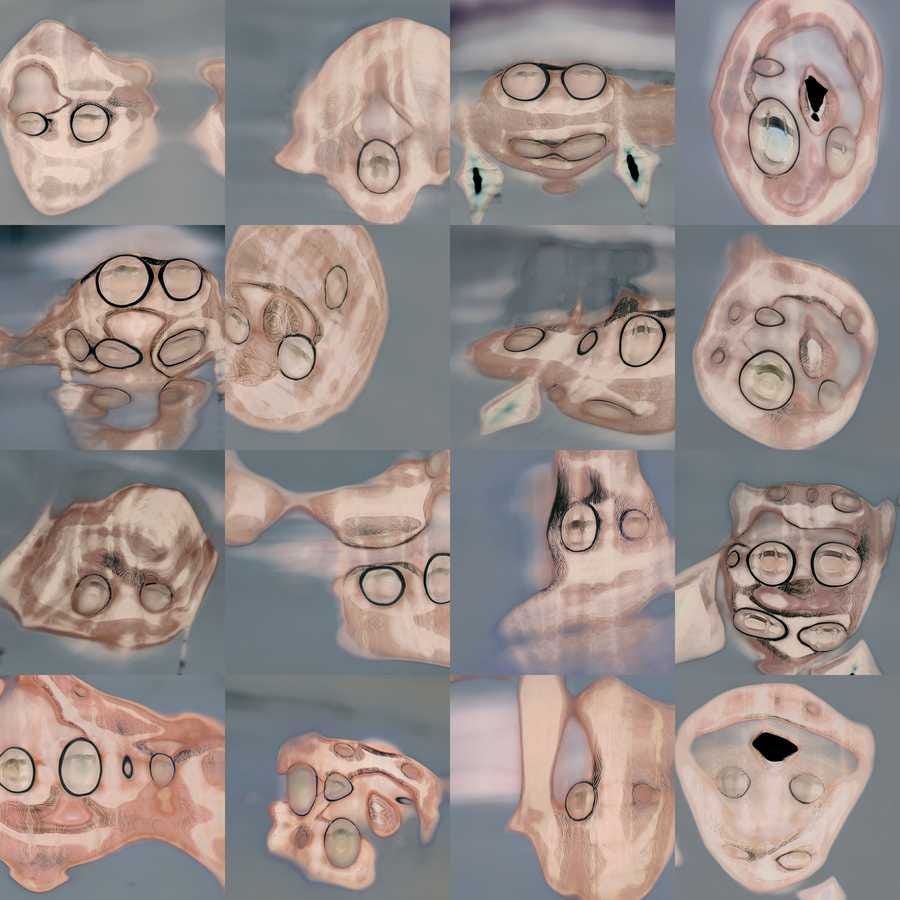

In the Cosmic Insignificance Therapy video from last week, I slowly zoom out over one minute on an undulating image, rendered by an AI model. I rendered the video with code I wrote in the Python language. To do that zoom, I had to express it mathematically.

I vaguely remembered that to animate a zoom, I needed a logarithmic interpolation function. I couldn’t be bothered to work it out myself so I asked ChatGPT. It spat out the answer:

min * (max/min)^t

I stared at this a moment. I hadn’t thought it could be expressed so simply. I was expecting at least a few logarithms in there. I had a flashback to sitting in my A-Level maths class 20 years ago learning geometric and arithmetic series. (See what I mean about the relationship between knowledge and a sense of place?) In my head, I substituted t = 0 (giving min) and t = 1 (giving max). All looks OK. I’ll remember this. ChatGPT just taught me something.
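Why no logarithms? Interpolating linearly between log(min) and log(max) and then exponentiating gives exp((1 - t)·log(min) + t·log(max)) = min^(1 - t)·max^t = min·(max/min)^t, so the logarithms cancel out. The short sketch below checks that equivalence numerically and shows the property that makes it suit a zoom: equal steps in t multiply the scale by the same factor. The function names `log_interp` and `log_interp_via_logs` are hypothetical, and `lo`/`hi` stand in for min/max to avoid shadowing Python's built-ins.

```python
import math

def log_interp(lo: float, hi: float, t: float) -> float:
    """Geometric interpolation: equal steps in t give equal ratios of scale."""
    return lo * (hi / lo) ** t

def log_interp_via_logs(lo: float, hi: float, t: float) -> float:
    """The 'expected' form: lerp in log space, then exponentiate back."""
    return math.exp((1 - t) * math.log(lo) + t * math.log(hi))

# The two forms agree for any t between 0 and 1.
for t in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(t, log_interp(2.0, 50.0, t), log_interp_via_logs(2.0, 50.0, t))

# Zooming from 1x to 16x in four equal steps of t: each step scales by the same factor.
zooms = [log_interp(1.0, 16.0, i / 4) for i in range(5)]
print(zooms)
```

A plain linear interpolation from 1 to 16 would instead add the same amount each step, so the zoom would appear to slow down as it went.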

This is an optimistic scenario of AI collaboration. I’m still using my brain and ChatGPT is helping me do so.

Later on, I wanted to make a video where we zoom in and out to different places, then end up back where we started so that I can seamlessly loop the video. Again, I knew the name of the tool I needed: a closed cubic spline. This is a mathematically defined line that goes through whatever points you want it to, yet stays smooth and elegantly curvy.

ChatGPT complied, but this time the answer was more involved. Brace yourself.

```python
import numpy as np

def log_interp_spline(points, t):
    n_points = len(points)
    if n_points < 2:
        raise ValueError("At least 2 points are required for interpolation")

    # Pad with the last point at the start and the first point at the end
    points = np.concatenate(([points[-1]], points, [points[0]]))

    # Map t from [0, 1] onto the padded index range
    t = t * (n_points - 1) + 1
    t_floor = int(np.floor(t))
    t_frac = t - t_floor

    # Four neighbouring control points around the current segment
    a = points[t_floor - 1]
    b = points[t_floor]
    c = points[t_floor + 1]
    d = points[t_floor + 2]

    # Catmull-Rom tangents at b and c
    p0 = (c - a) / 2
    p1 = (d - b) / 2

    # Cubic coefficients for the segment from b to c
    coeff0 = 2 * b + p0 - 2 * c + p1
    coeff1 = -3 * b - 2 * p0 + 3 * c - p1
    coeff2 = p0
    coeff3 = b

    result = coeff0 * (t_frac ** 3) + coeff1 * (t_frac ** 2) + coeff2 * t_frac + coeff3
    return result
```

How long did you spend with that? If you’re me last week, not very long at all. I was not whisked back to my undergraduate lecture on computer animation where I was probably taught this. I just copy-pasted it and rendered a quick video to confirm it worked.

Or did I? As I look at it now, I’m not convinced it’s in logarithmic space. So did I have to fix that? This was just last week, and I can’t remember. This is alarming. It seems I was coding with ChatGPT like I drive with satnav. I feel the tool dependency. My mind atrophies.
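Rereading the function, the suspicion holds: it interpolates the raw values, not their logarithms. Because t is scaled by `n_points - 1`, it also never travels the final segment from the last point back to the first, and at t = 1 it indexes past the end of the padded array. Below is a minimal sketch of a closed version, assuming the points are positive zoom factors. It takes logs, wraps indices with modulo so the curve returns to its start, and exponentiates at the end. The name `closed_log_spline` is hypothetical, and the tangents use the same Catmull-Rom form as the answer above. This is one way to do it, not necessarily what ended up in the video.

```python
import numpy as np

def closed_log_spline(points, t):
    """Closed Catmull-Rom spline through positive values, interpolated in log space.

    t in [0, 1] travels once around the loop; t = 0 and t = 1 both return points[0].
    """
    logs = np.log(np.asarray(points, dtype=float))  # work with log(zoom), not zoom
    n = len(logs)
    if n < 2:
        raise ValueError("At least 2 points are required for interpolation")

    # n points on a closed loop give n segments, including last -> first.
    s = (t % 1.0) * n
    i = int(np.floor(s))
    u = s - i  # position within segment i, in [0, 1)

    # Neighbouring control points, wrapping around the loop with modulo.
    a, b, c, d = [logs[(i + k) % n] for k in (-1, 0, 1, 2)]

    # Catmull-Rom tangents at b and c.
    m0 = (c - a) / 2
    m1 = (d - b) / 2

    # Cubic Hermite form of the segment from b to c (same coefficients as above).
    y = ((2 * b + m0 - 2 * c + m1) * u ** 3
         + (-3 * b - 2 * m0 + 3 * c - m1) * u ** 2
         + m0 * u
         + b)
    return float(np.exp(y))  # back from log space to a zoom factor
```

With two points, 1 and 100, a quarter of the way round lands on 10 (the geometric midpoint) rather than 50.5, which is what working in log space buys for a zoom.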

IV

In a sense, ChatGPT is the opposite of a satnav. I’m the one plotting our route, barking out orders. Delegation is not a crime. It’s a skill, a subtle one at that, and one I often see myself fall short on. I’d get little done if I followed each intellectual tangent on my way.

But, as an artist, I make stuff that nobody asked for. In doing this, I hope to reveal something in the process that I could not have just thought up without getting my hands dirty. Each act of delegation creates distance from some part of the medium. It transforms what I might uncover in my intuitions, reducing the terrain but hopefully giving me time to go deeper.

When delegating, the question is not just ‘what will maximise my output?’ but also ‘what parts of this process do I need to experience myself?’

We all need to choose a level somewhere between director and craftsman, and it’s a question I hope to keep at the forefront of my mind as I continue.

Tim

Montreal, 7 April 2023